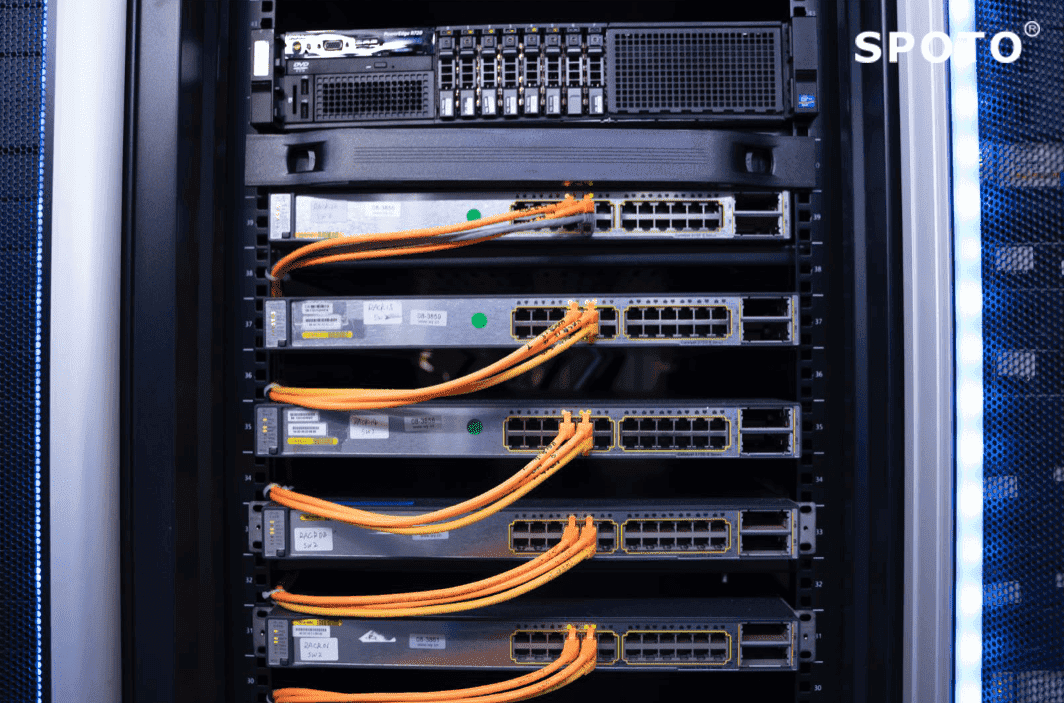

First, note that "access layer switch", "aggregation layer switch", and "core layer switch" are not switch types or attributes; the names describe the role a switch plays in the network. In terms of topology, a computer network typically adopts a three-layer architecture: access layer, aggregation layer, and core layer.

The core layer is the hub of the network, so core switches should be manageable Gigabit or even 10 Gigabit switches with high bandwidth, reliability, performance, and throughput. Layer 3 switches, which forward based on IP addresses and protocols, are commonly used in the core layer and, in smaller numbers, in the aggregation layer. Some Layer 3 switches also provide Layer 4 switching, which makes forwarding decisions based on the protocol port information (TCP/UDP port number) carried in the packet.

Many readers have asked how to select a core switch, so today let's go through the main selection parameters: scalability, forwarding rate, backplane bandwidth, Layer 4 switching, and system redundancy.

Core switches should use a modular design with a generous number of slots, so that the network can be expanded easily. Modules can then be selected in different quantities, rates, and interface types according to current or future needs, to adapt to changing network requirements.

Scalability

1. The number of slots. The slot is used to install various functional modules and interface modules. Since the number of ports provided by each interface module is fixed, the number of slots fundamentally determines the number of ports that the switch can accommodate.

In addition, every functional module (such as a supervisor engine module, IP voice module, extended service module, network monitoring module, or security service module) occupies one slot, so the number of slots fundamentally determines the scalability of the switch.

2. Module type. The more module types a switch supports (such as LAN interface modules, WAN interface modules, ATM interface modules, and extended function modules), the more scalable it is. Taking LAN interface modules as an example, the range should include RJ-45 modules, GBIC modules, SFP modules, 10 Gbps modules, and so on, to meet the needs of complex environments and network applications in large and medium-sized networks.

Forwarding rate

Data in the network travels as individual packets, and processing each packet consumes resources. The forwarding rate (also called throughput) is the number of packets that can pass through the switch per unit of time without packet loss. Throughput is like the traffic flow of an overpass: it is the most important parameter of a Layer 3 switch and indicates its actual performance. If the throughput is too low, the switch becomes a network bottleneck and reduces the transmission efficiency of the entire network. The switch should be able to achieve wire-speed switching, i.e. its switching rate matches the data rate on the transmission line, so that the switch itself does not become the bottleneck.

For a switch to achieve non-blocking transmission, its throughput must be at least the value given by the following formula:

Throughput (Mpps) = number of 10 Gigabit ports × 14.88 Mpps + number of Gigabit ports × 1.488 Mpps + number of 100 Megabit ports × 0.1488 Mpps

If the switch's nominal throughput is greater than or equal to the calculated value, the switch can reach line rate when performing Layer 3 switching. In this formula:

The theoretical throughput of a 10 Gigabit port at a packet length of 64 B is 14.88 Mpps.

The theoretical throughput of a Gigabit port at a packet length of 64 B is 1.488 Mpps.

The theoretical throughput of a 100 Mbps port with a packet length of 64 B is 0.1488 Mpps.

So where do these values come from?

Wire-speed packet forwarding is measured by the number of 64 B packets (the minimum Ethernet frame size) sent per unit of time. Taking a Gigabit Ethernet port as an example, the calculation is:

1,000,000,000 bps ÷ 8 bits per byte ÷ (64 + 8 + 12) B = 1,488,095 pps

For a 64 B Ethernet frame, the fixed overhead of the 8 B preamble and the 12 B inter-frame gap must also be counted. The wire-speed packet forwarding rate of a Gigabit Ethernet port is therefore 1.488 Mpps. The wire-speed rate of a 10 Gigabit Ethernet port is exactly 10 times that of Gigabit Ethernet, or 14.88 Mpps, while the wire-speed rate of a Fast Ethernet port is one tenth that of Gigabit Ethernet, or 0.1488 Mpps.
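The per-port figures can be reproduced with a few lines of Python. This is only a minimal sketch of the arithmetic above; the function and constant names are illustrative and do not come from any vendor tool.

```python
# Fixed per-frame overhead on the wire, as described in the text.
PREAMBLE_BYTES = 8          # preamble, counted in addition to the frame itself
INTERFRAME_GAP_BYTES = 12   # minimum gap between frames, expressed in bytes


def line_rate_pps(link_bps: int, frame_bytes: int = 64) -> float:
    """Theoretical maximum packets per second for one port at wire speed."""
    bits_on_wire = (frame_bytes + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / bits_on_wire


if __name__ == "__main__":
    for name, bps in [("100 Mbps", 100_000_000),
                      ("1 Gbps", 1_000_000_000),
                      ("10 Gbps", 10_000_000_000)]:
        print(f"{name}: {line_rate_pps(bps) / 1e6:.4f} Mpps")
```

Passing a larger `frame_bytes` value shows why 64 B is the worst case: the longer the frame, the fewer packets per second the port has to forward.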

For example:

A switch with 24 Gigabit ports needs a fully loaded throughput of 24 × 1.488 Mpps = 35.71 Mpps to guarantee non-blocking packet switching when all ports run at line rate. Similarly, a switch that can provide up to 176 Gigabit ports needs a throughput of at least 261.9 Mpps (176 × 1.488 Mpps ≈ 261.9 Mpps) to be a truly non-blocking design.
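The throughput check for a mixed port configuration can be sketched as follows. The port mix is passed as a mapping from port speed in Mbps to port count; that input format and the function names are assumptions made for illustration.

```python
from typing import Mapping

# A minimum-size frame occupies (64 + 8 + 12) bytes = 672 bits on the wire.
WIRE_BITS_PER_MIN_FRAME = (64 + 8 + 12) * 8


def required_throughput_mpps(ports_by_speed_mbps: Mapping[int, int]) -> float:
    """Throughput (Mpps) needed for all ports to forward 64 B frames at line rate."""
    total_pps = sum(
        count * speed_mbps * 1_000_000 / WIRE_BITS_PER_MIN_FRAME
        for speed_mbps, count in ports_by_speed_mbps.items()
    )
    return total_pps / 1_000_000


def is_non_blocking(nominal_mpps: float, ports_by_speed_mbps: Mapping[int, int]) -> bool:
    """True if the nominal (data sheet) throughput covers the calculated requirement."""
    return nominal_mpps >= required_throughput_mpps(ports_by_speed_mbps)


if __name__ == "__main__":
    print(round(required_throughput_mpps({1000: 24}), 2))   # 24 Gigabit ports
    print(round(required_throughput_mpps({1000: 176}), 2))  # 176 Gigabit ports
```

Because the sketch uses the unrounded per-port rate (1.488095 Mpps), its results can differ in the last digit from a hand calculation with 1.488 Mpps.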

Backplane bandwidth

Backplane bandwidth is the maximum amount of data that can be exchanged between the switch's interface processors or interface cards and the data bus, like the total number of lanes on an overpass. Since all communication between ports passes through the backplane, the bandwidth it provides becomes the bottleneck for concurrent communication between ports. The larger the backplane bandwidth, the more bandwidth is available to each port and the faster data is exchanged; the smaller the backplane bandwidth, the less is available to each port and the slower data is exchanged. In other words, backplane bandwidth determines the data processing capability of the switch, so more is better, especially for aggregation and core switches. To achieve full-duplex non-blocking transmission, the switch must meet the minimum backplane bandwidth requirement, calculated as follows:

Backplane bandwidth = number of ports × port rate × 2

Tip: A Layer 3 switch is qualified only if both its forwarding rate and its backplane bandwidth meet the minimum requirements; neither can be omitted.

For example, for a switch with 24 Gigabit ports:

Backplane bandwidth = 24 × 1000 Mbps × 2 / 1000 = 48 Gbps.
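The same calculation extends to mixed port speeds by summing over each port group. A minimal sketch, assuming the port mix is given as a mapping from port speed in Mbps to port count (the function name is illustrative):

```python
from typing import Mapping


def min_backplane_gbps(ports_by_speed_mbps: Mapping[int, int]) -> float:
    """Minimum backplane bandwidth (Gbps) for full-duplex non-blocking operation.

    Applies: number of ports x port rate x 2, summed over all port speeds.
    """
    total_mbps = sum(
        count * speed_mbps * 2  # x2 because each port sends and receives at once
        for speed_mbps, count in ports_by_speed_mbps.items()
    )
    return total_mbps / 1000


if __name__ == "__main__":
    print(min_backplane_gbps({1000: 24}))            # 24 Gigabit ports
    print(min_backplane_gbps({1000: 24, 10000: 4}))  # plus four 10 Gigabit uplinks
```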

Layer 4 switching

Layer 4 switching is used to provide fast access to network services. In Layer 4 switching, the forwarding decision is based not only on the MAC address (Layer 2 bridging) or the source/destination IP address (Layer 3 routing), but also on the TCP/UDP (Layer 4) application port number. It is designed for high-speed intranet applications. In addition to load balancing, Layer 4 switching supports traffic flow control based on application type and user ID. Because a Layer 4 switch sits directly in front of the servers and is aware of application session content and user rights, it is also a good platform for preventing unauthorized access to servers.
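To make the idea concrete, the following toy sketch picks a backend server from the protocol and destination port of a flow, then spreads clients across the pool by hashing the source IP. It is only an illustration of a Layer 4 decision, not how any particular switch implements it; the pool table, addresses, and function names are all hypothetical.

```python
import zlib
from typing import NamedTuple, Optional


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    protocol: str   # "tcp" or "udp"
    dst_port: int


# Hypothetical mapping of (protocol, destination port) to a server pool.
SERVICE_POOLS = {
    ("tcp", 80): ["10.0.1.11", "10.0.1.12"],
    ("tcp", 443): ["10.0.2.11", "10.0.2.12", "10.0.2.13"],
    ("udp", 53): ["10.0.3.11"],
}


def pick_server(flow: Flow) -> Optional[str]:
    """Choose a backend using Layer 4 information; None means no service matches."""
    pool = SERVICE_POOLS.get((flow.protocol, flow.dst_port))
    if pool is None:
        return None  # unknown service: a real device might drop or fall back to L3
    # Hash the client address so the same client keeps hitting the same server.
    index = zlib.crc32(flow.src_ip.encode("ascii")) % len(pool)
    return pool[index]


if __name__ == "__main__":
    print(pick_server(Flow("192.168.1.20", "10.0.0.1", "tcp", 443)))
    print(pick_server(Flow("192.168.1.20", "10.0.0.1", "tcp", 8081)))
```

Returning `None` for an unmatched port is what lets the same table double as a simple access filter: traffic to services that are not listed never reaches a server.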

Module redundancy

Redundancy is what keeps the network running safely. No manufacturer can guarantee that its products will never fail in operation, and how quickly the network recovers from a failure depends on the redundancy of the equipment. In a core switch, important components should be redundant, for example the management modules and power supplies, to keep the network as stable as possible.

Routing redundancy

The HSRP and VRRP protocols provide load balancing and hot standby for the core devices. When one of the core switches or one of the dual aggregation switches fails, the Layer 3 routing device and the virtual gateway can fail over quickly, providing dual-path redundancy and keeping the whole network stable.

If you want to learn more networking techniques, consider SPOTO, which has focused on IT certification training for 16 years.
